I’m still reading The Pragmatic Programmer and this is one of several posts I’ve written about the experience.

“Your ability to learn new things is your most important strategic asset. But how do you learn how to learn, and how do you know what to learn?” (p.14) I’ve asked colleagues, mentors, and managers this question time and again as I’ve worked to become a better engineer.

Pragmatic frames developer growth around the concept of a “knowledge portfolio,” which it likens to a financial portfolio. The tips it gives for building one sound incredibly familiar:

- “Invest regularly . . . even if it’s just a small amount” (p.14)

- “Manage risk” (p.15)

- “Review and rebalance [regularly]”

- “Diversify . . . the more technologies you are comfortable with, the better you will be able to adjust to change.” (Having spent nine years at my previous job and recently started a new one, I’ve been feeling that one lately. Adjusting to change has been hard.)

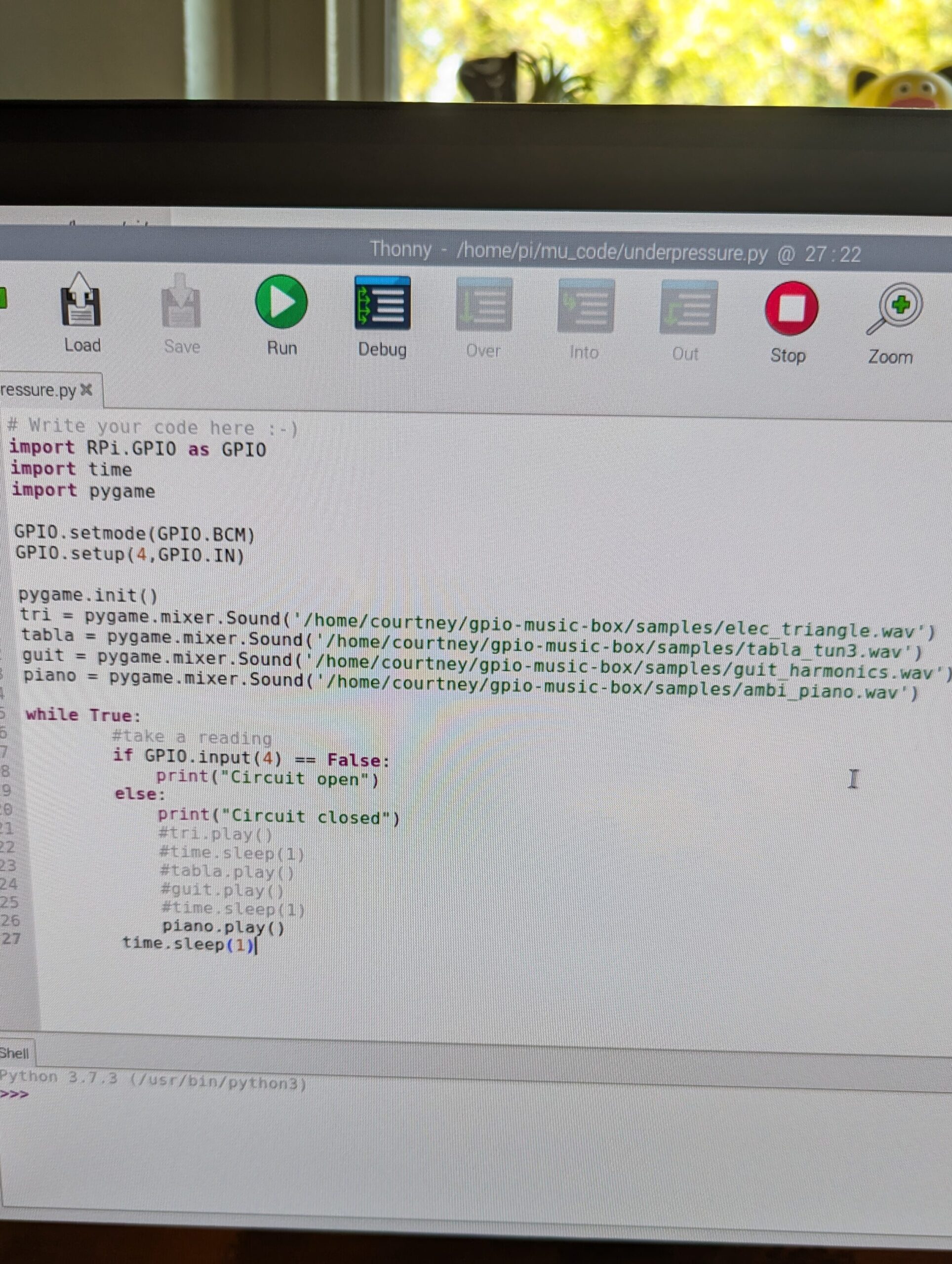

The hardest thing for me is continuing to invest once I’m comfortable. (With tech, at least; maybe this is where the financial comparison falls apart.) It’s hard not because I don’t want to learn new stuff, but because when I start a side project, I want to have fun! I want to build something cool! Nothing kills the excitement of a shiny new project faster than getting bogged down trying to figure out some dumb setup or syntax.

Surprisingly, I find Pragmatic’s response to this inspiring:

“It doesn’t matter whether you ever use any of these technologies on a project . . . The process of learning will expand your thinking, opening you to new possibilities and new ways of doing things” (p.16)

As the preface to this book declares, “This is a book about doing,” so I intend to take the tips and challenges to heart (or hand, I suppose) and take action. Here’s how I’m implementing some of the specific actions that Pragmatic recommends for developing a knowledge portfolio:

- “Learn at least one new language every year” – This brings me back to the existential-style question I had at the start (what does it mean to have truly learned a language?). This time, I can lean on the quote above and simply commit to investing in learning a new language (though I’m still interested in how different levels of language knowledge could be defined). My 2025 language is TypeScript.

- “Read a technical book each month,” “Participate in local user groups and meetups” – I like to read, but a technical book each month is a steep start, so I’m beginning with one each quarter instead. I’m intentionally reading Pragmatic slowly, so my May–July book is Looks Good to Me. I’m reading it with a cute little online dev book club, which doubles as my meetup.

- “Stay current” – I follow TLDR’s newsletters, though I don’t always make time to read them each day. Working in an emerging technology group probably also helps.

- “Review and rebalance” – I set up a spreadsheet to track my investments and a quarterly calendar reminder to check on it. Once I reach a version I’m happy with, I’ll share a template here.

Happy coding!

Courtney